Super-Speed Storage

What’s the best storage option for you?

Once upon a time, there was just one simple solution when it came to computer storage: the ever-reliable, infallible spinning hard drive. That signature sound of platters whirring into life undoubtedly roused the spirit of many a gamer, who knew that within mere tens of minutes they would be sitting comfortably, ready to load their favorite 32-bit game. It was a technology that, although archaic by today’s standards, developed exponentially for its time. First there was IDE, then SATA, then the second generation of SATA, and finally SATA revision 3.0, the pinnacle of SATA technology: an interconnect designed to support, and stay compatible with, future storage devices and drives, including the next generation of HDDs.

But something happened, an event that would shape mankind throughout the ages. An event we like to call SSD-Gate. Actually, no, that was a lie. A bad one. We just made that up. But still, it was pretty revolutionary. In 2008, Intel released the X25-M, one of the first commercially available SSDs. With mind-blowing speeds of up to 250MB/s read and 100MB/s write, it had PC enthusiasts hooked, and so the craze began. Soon we would all be running the typical combo: because SSD storage was so ridiculously expensive at the time, the most common setup was an SSD for your OS and a traditional hard drive for your games, media, and other files. However, even SATA 3 had its limits. Eventually SSD speeds would overtake that now-aging platform, which had grown decrepit well ahead of its time, forcing the powers that be to find new and inventive ways around this annoying problem. Fast-forward to 2015, and the nature of the beast has changed entirely. It turns out that manufacturers don’t like bottlenecks. Indeed, they hate them. We now live in a time of RAID 0 arrays, M.2 drives, PCIe cards, and all sorts of future tech right around the corner that makes the revolutionary SSD look as good as Donald Trump’s “hair.” Forget Moore’s law, let’s talk about SSD speed and capacity acceleration. So which wonderful and lovely storage options are available to you today? Which one should you choose? And how much bang are you getting for your buck? Read on to find out more.

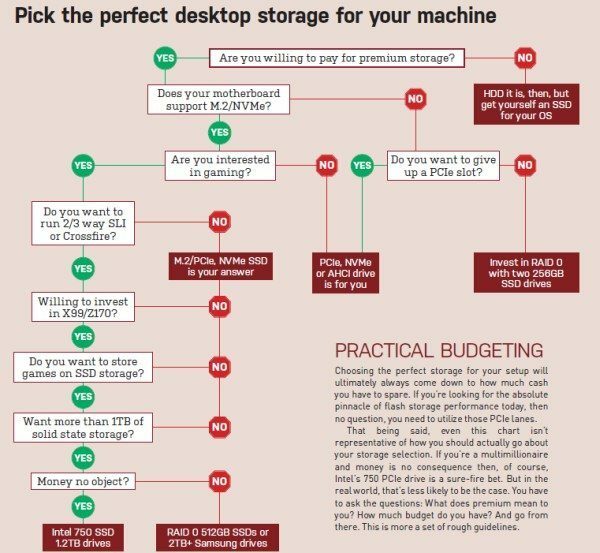

OVER THE LAST 20 YEARS, storage has changed. A lot. From hard disks and IDE connectors to all three generations of SATA, it’s always been the connection standards that have been the bottleneck. When SATA 6Gbps was first developed, nobody expected us to reach today’s speeds so quickly. Indeed, with SSDs and NAND flash far outstripping SATA 3’s rated speeds, it’s remarkable that SATA 3 has kept us going for this long. And so, as is often the way when PC enthusiasts are presented with a bottleneck, the manufacturers tried to find a way around this gargantuan wall, and to pry our hard-earned cash out of our wallets and into their pockets. The initial quick-and-easy solution was an old trick: the RAID array. RAID is more often than not used for redundancy rather than speed, but RAID 0 provided breakneck throughput by splitting data across two or more drives, theoretically allowing it to be read from all of them at the same time. Then came the push to use PCIe, an interface that we still haven’t managed to saturate: a full x16 PCIe 3.0 slot can transfer upward of 120Gbps, roughly 20 times the 6Gbps of SATA 3. After that came M.2, a smaller form factor originally designed for laptops to fill a similar role to mSATA. The M.2 interconnect had one particularly interesting asset: it connects directly to the PCIe bus, so it can take full advantage of that platform’s expanded bandwidth and increased speeds, increasing NAND flash performance almost fourfold. Impressive. Ultimately, storage connectivity has always depended on one component: the motherboard. The more modern the motherboard, the more likely it is to support these new storage standards. Intel’s Z170 chipset for its latest Skylake processors provides 20 PCIe 3.0 lanes, in direct response to the growing number of people using those same PCIe lanes for storage as well as graphical horsepower.
Because PCIe storage draws on the same PCIe lanes as your graphics cards, you have fewer lanes available for your GPUs. In some scenarios, if you’re using SLI or CrossFire, it may not be possible to install an M.2 PCIe drive without the additional lanes provided by the latest Z170 chipset. With Intel increasing the number of PCIe lanes in its chipsets and processors, the rise of PCIe storage seems almost inevitable. Unless you’ve been living under a rock for the last three years, you’re probably aware that 2.5-inch SATA SSDs have dramatically dropped in price.

It’s now possible to pick up a 500GB drive for a third of what it cost in 2011. The thing is, while the 2.5-inch form factor is fantastic in smaller builds, the SATA interface behind it now limits its throughput. Performance, although far better than that of the almost defunct spinning hard drive, falls short of today’s PCIe-based storage. In fact, the only way to get more out of SATA SSDs is to work around the sticky SATA barrier, and for that, there’s only one solution: build a RAID array.
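
To put rough numbers on that SATA barrier, here is a minimal Python sketch of the usable-bandwidth arithmetic. It assumes the published signaling rates and line encodings (6Gbps with 8b/10b for SATA 3, 8GT/s per lane with 128b/130b for PCIe 3.0) and ignores protocol overhead, so treat the results as ceilings rather than benchmarks; the function name `usable_mb_per_s` is our own.

```python
# Usable-bandwidth ceilings for the interfaces discussed in this feature.
# Assumptions: SATA 3 signals at 6 Gbps with 8b/10b encoding (8 data bits per
# 10 on the wire); PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding.
# Protocol overhead is ignored, so real drives land somewhat lower.

def usable_mb_per_s(gt_per_s: float, payload_bits: int, line_bits: int, lanes: int = 1) -> float:
    """Return usable bandwidth in MB/s (10**6 bytes per second)."""
    data_bits_per_s = gt_per_s * 1e9 * payload_bits / line_bits * lanes
    return data_bits_per_s / 8 / 1e6

sata3 = usable_mb_per_s(6, 8, 10)  # one SATA 6Gbps port
pcie3 = {lanes: usable_mb_per_s(8, 128, 130, lanes) for lanes in (1, 2, 4, 16)}

print(f"SATA 3      {sata3:7.0f} MB/s")
for lanes, mb_s in pcie3.items():
    print(f"PCIe 3.0 x{lanes:<2} {mb_s:7.0f} MB/s  ({mb_s / sata3:.1f}x SATA 3)")
```

The x4 row is the ceiling shared by M.2 and U.2 PCIe drives, and the x16 row (about 126Gbps on the wire) is where the "upward of 120Gbps" figure comes from. Real SATA SSDs top out around 550MB/s, a little under the 600MB/s computed here, because of command and framing overhead.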

There’s a plethora of RAID levels to choose from; however, the most common ones you’ll come across are RAID 0, 1, 5, and 10. RAID 0, possibly the most interesting of the four, requires a minimum of two drives. The principle is fairly basic: split the data across the drives, then read or write from all of them simultaneously for an impressive performance boost. The one major downside is that if any one of your drives fails, you lose all of your information with no chance of recovery. Although in today’s climate that’s a pretty rare occurrence, it’s still advisable to keep the vast majority of your valued files backed up offsite. After all, you want to make sure that you’ve got at least one backup, and ideally a backup for your backup as well. We wouldn’t want to be around when your primary system and your backup both fail. Heh, Star Trek.
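
The striping idea is simple enough to model in a few lines. The sketch below is a toy, not how Intel RST or any real controller is implemented: it deals fixed-size chunks of a byte string round-robin across hypothetical drives, reads them back in the same order, and estimates the chance of losing the whole array. The 4-byte stripe size and the 2% per-drive failure figure are illustrative assumptions, not measured values.

```python
# Toy model of RAID 0 striping (illustration only; real controllers stripe
# disk blocks, typically tens of KB, not bytes of a Python string).

def stripe(data: bytes, drives: int, stripe_size: int) -> list[list[bytes]]:
    """Deal fixed-size chunks round-robin: chunk 0 to drive 0, chunk 1 to drive 1, and so on."""
    disks: list[list[bytes]] = [[] for _ in range(drives)]
    for chunk_no, start in enumerate(range(0, len(data), stripe_size)):
        disks[chunk_no % drives].append(data[start:start + stripe_size])
    return disks

def unstripe(disks: list[list[bytes]]) -> bytes:
    """Read the chunks back row by row, in the same round-robin order."""
    chunks = []
    for row in range(max(len(disk) for disk in disks)):
        for disk in disks:
            if row < len(disk):
                chunks.append(disk[row])
    return b"".join(chunks)

def array_failure_chance(per_drive: float, drives: int) -> float:
    """RAID 0 is lost if ANY drive fails (assumes independent failures)."""
    return 1 - (1 - per_drive) ** drives

# Usage with made-up inputs: a 4-byte stripe and a hypothetical 2% per-drive failure rate.
disks = stripe(b"ABCDEFGHIJKLMNOP", drives=2, stripe_size=4)
print(disks)  # [[b'ABCD', b'IJKL'], [b'EFGH', b'MNOP']]
assert unstripe(disks) == b"ABCDEFGHIJKLMNOP"
for n in (2, 3, 4):
    print(n, "drives:", f"{array_failure_chance(0.02, n):.1%}")
```

The last function is the catch with RAID 0: because losing any single drive destroys the array, the odds of losing everything grow with every drive you add, which is why the backup advice above matters.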

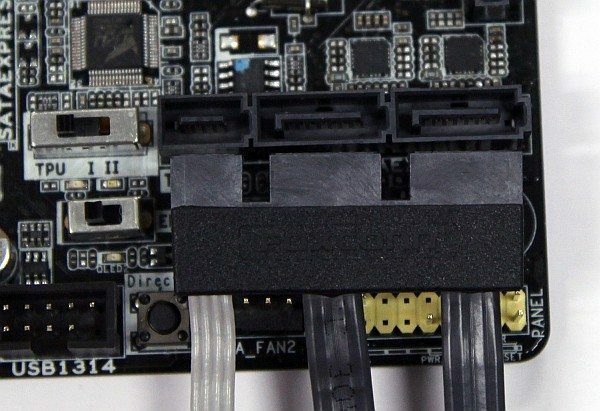

Anyway, back to the point: keeping your OS and some games on your array is often the best solution, especially in this age of cloud storage and super-fast downloads, when reinstalling an OS doesn’t take a week. To set up a RAID 0 system, you need your BIOS, Intel’s Rapid Storage Technology, and at least two drives. Instructions can be found in your motherboard’s user manual; however, changing the “PCH Storage” option from “AHCI” to “RAID” should do the trick. Then it’s a simple case of rebooting, mashing Ctrl-I to get into the Intel Rapid Storage Technology configuration screen, and creating your RAID array from there. Three Samsung 850 Pros in RAID 0 gave us a grand total of 384GB of storage, with synthetic benchmark speeds ranging anywhere from 1,200MB/s to 1,500MB/s read and 1,000MB/s to 1,100MB/s write. This setup really shines in desktop copies and transfers, cutting overall copy time in half and making for a much smoother experience, with an instantaneous response when moving folders full of photos. But wait, there’s more.

RAID 0 isn’t your only option. RAID 1 typically uses two drives and mirrors your data across both at the same time. The benefit here is that if one of your drives fails, there’s always a copy. A hardware RAID controller can also potentially read from both drives for improved speeds. RAID 5 is a little more complex. It requires a minimum of three drives and stripes the data across them along with a rotating parity block. If any single drive fails, its contents can be rebuilt from the data and parity on the surviving drives, at the cost of one drive’s worth of capacity. This is often used in NAS devices or servers, where multiple people may be using the array at any given time. Then there’s RAID 10, the king of money spending, storage, and dependability. It takes the best parts of RAID 0 and RAID 1 and merges them together, mirroring data onto pairs of drives and then striping across those pairs. You need a minimum of four disks to do this, and you lose half of your storage capacity in the process, but it’s the most effective and efficient way to use SSDs, just not for those looking for cost-effective storage. Ultimately, RAID 0 provides a cheap, fast, easy, eye-pleasing solution to modern-day storage woes. Although the synthetic benchmark speeds often don’t translate well into gaming scenarios, the snappiness you’ll find in desktop file transfers will be enough to make any PC enthusiast swoon. The only problem is boot times. If you’re looking for a superfast startup, you’re more than likely still going to want to use just a single SSD. Intel’s Rapid Storage boot screen takes a considerable amount of time to get past, and even though you benefit from those speedy read times, your startup will suffer because of it.
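
The capacity trade-offs between these levels, and the parity trick that lets RAID 5 survive a dead drive, can be sketched as follows. This is an illustrative model with hypothetical function names, assuming identical drives and the textbook layouts (RAID 10 as striped mirrored pairs); it shows a single RAID 5 stripe and leaves out the parity rotation a real array performs.

```python
from functools import reduce

MIN_DRIVES = {0: 2, 1: 2, 5: 3, 10: 4}

def usable_capacity(level: int, drives: int, size_gb: int) -> int:
    """Usable capacity (GB) of an array of identical drives, textbook layouts."""
    if drives < MIN_DRIVES[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DRIVES[level]} drives")
    if level == 0:
        return drives * size_gb        # striping: all space usable, no redundancy
    if level == 1:
        return size_gb                 # mirroring: every drive holds the same data
    if level == 5:
        return (drives - 1) * size_gb  # one drive's worth of space holds parity
    if drives % 2:
        raise ValueError("RAID 10 (striped mirror pairs) needs an even drive count")
    return drives // 2 * size_gb       # RAID 10: half the raw space is mirror copies

def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks: the RAID 5 parity operation."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# Three 128GB drives in RAID 0, matching the 384GB array tested above.
print(usable_capacity(0, 3, 128))   # 384
print(usable_capacity(10, 4, 256))  # 512

# One RAID 5 stripe on three drives: two data blocks plus their parity block.
d0, d1 = b"\x0f\xf0", b"\x33\x55"
parity = xor_blocks([d0, d1])
# Simulate losing the drive that held d1: XOR the survivors to rebuild it.
assert xor_blocks([d0, parity]) == d1
```

XOR suits parity because any one block in a stripe equals the XOR of all the others, so whichever single drive fails, its share can be recomputed from the survivors.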

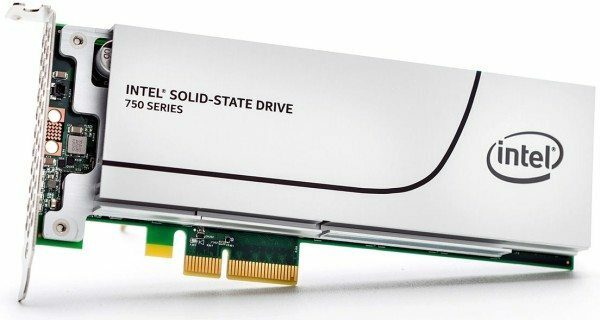

The most exciting advancement we’ve seen over the last year or so has been the continued push into PCIe SSDs, and allow us to explain why. Using the PCI Express interconnect to deliver stunning storage speeds is a fantastic step forward, and undoubtedly where the future of storage lies. Originally hampered by insane costs, PCIe SSDs have dropped dramatically in price over the last year. Indeed, per gigabyte, they now cost well under twice as much as a premium SATA SSD. That still sounds like a lot, but it’s far cheaper than when they debuted back in 2012.

Currently, it’s around 92 cents per gigabyte for an Intel 750, or 86 cents per gigabyte for Samsung’s OEM SM951, versus 54 cents per gigabyte for a Samsung 850 Pro SATA SSD. But considering you’re getting almost four times the performance from a PCIe drive, it’s more than cost-effective, and if SSD prices are anything to go by, it won’t be long until these drives cost what SATA SSDs do today. Hardware and connection speeds haven’t been the only problems manufacturers have had to face. In fact, they’re only the start. The biggest conundrum has been how to move beyond the aging AHCI protocol. AHCI, the software interface between the operating system and the storage controller, was designed for spinning drives and other high-latency devices, nothing comparable to today’s NAND flash storage: it offers a single command queue just 32 commands deep. Although SSDs still work quite efficiently over AHCI, a new interface was needed. Welcome to NVMe (Non-Volatile Memory Express), a collaborative project worked on by a consortium of over 80 members, directed by Samsung and Intel. NVMe was designed from the ground up for SSDs attached over PCIe, and supports up to 65,535 I/O queues, each up to 65,536 commands deep. Ultimately, though, the biggest problem in the enthusiast arena has been aesthetics.
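
Before getting to looks, the per-gigabyte prices above are worth a quick sanity check. The sketch below uses only the prices quoted in this feature and, as an explicit assumption, treats "almost four times the performance" as a flat 4x speedup for both PCIe drives; real-world gains vary by workload.

```python
# Prices per gigabyte quoted in this feature (US cents, at time of writing).
CENTS_PER_GB = {"Intel 750": 92, "Samsung SM951": 86, "Samsung 850 Pro": 54}

# Assumption: "almost four times the performance" treated as a flat 4x
# for both PCIe drives, with the SATA 850 Pro as the 1x baseline.
SPEEDUP = {"Intel 750": 4.0, "Samsung SM951": 4.0, "Samsung 850 Pro": 1.0}

def relative_cost(drive: str, baseline: str = "Samsung 850 Pro") -> tuple[float, float]:
    """Return (price per GB vs. baseline, price per GB per unit of speed vs. baseline)."""
    price_ratio = CENTS_PER_GB[drive] / CENTS_PER_GB[baseline]
    return price_ratio, price_ratio / SPEEDUP[drive]

for drive in CENTS_PER_GB:
    price_ratio, value_ratio = relative_cost(drive)
    print(f"{drive:16} {price_ratio:.2f}x per GB, {value_ratio:.2f}x per GB per unit of speed")
```

Under that assumption, the Intel 750 costs about 1.7 times as much per gigabyte as the 850 Pro, but only about 0.43 times as much per gigabyte per unit of speed; the SM951 works out to roughly 1.59x and 0.40x.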

When manufacturers first introduced their lineups of PCIe SSDs, they came covered in a lovely shade of green PCB. On top of this, you lose out on a PCIe slot, which, for more aesthetically minded system builders, can ruin the look of a good build. M.2 PCIe drives do little to alleviate the situation. Raised above the board, they suffer from the same drawbacks, with the vast majority sporting that signature shade of grass-green PCB. Indeed, it’s only recently, with the launch of Samsung’s 950 Pro, that the first all-black consumer-grade PCB has been seen in this department. Meanwhile, the industry has been looking for a way to improve the connectivity speeds of the traditional 2.5-inch drive, because although M.2 drives are incredibly powerful and efficient, they still have flaws, namely thermal limitations and drive capacity. Samsung has just announced its first 1TB M.2 drive, but it will no doubt come at a great cost and won’t be available until sometime next year. Welcome to SFF-8639. A connection standard that’s been used in enterprise-grade systems for some time now, it’s finally making its way to the consumer side, notably with a rename: U.2 (not the band; it’s pronounced you-dot-two), bringing it more in line with M.2 and making it a little easier to remember. U.2 offers the same access that M.2 has, using four PCIe 3.0 lanes, and promises the same speed, just in the traditional 2.5-inch form factor. The only downside is that, for the time being at least, the cable is rather bulky, and only Intel and a few select partners support it. In the end, these drives are no doubt the future of storage expansion. As more memory chip manufacturers migrate to PCIe-based devices, it’s inevitable that these drives, especially those using NVMe, will become the SSDs of tomorrow.
With stunning performance, low cost, low power usage, and small form factors, is there any doubt as to which storage solution has won the war?

Ahhh, the M.2 form factor, the golden era of computing, following Moore’s law admirably: as transistors get smaller and smaller, storage follows suit. With Samsung and other storage conglomerates pushing memory chips with up to 48-layer V-NAND, it was surely only a matter of time before a smaller form factor storage device arrived. Indeed, long gone are the days of clunky 3.5-inch drives. In fact, if you were to buy any modern Ultrabook, there’s no doubt that you’d be picking up one of these bad boys with it, whether you knew it or not. Although we’ve spoken about M.2 in depth already, there’s more to it than the all-out speed demons. You can also get M.2 drives that use the SATA interface and are as fast as today’s standard SSDs. Now, we know what you’re thinking: why on earth would you want that? Well, simply put, M.2 drives are far less complicated to build than their 2.5-inch counterparts, which makes them extremely affordable and accessible to the vast majority of us. Indeed, you can get your grubby little hands on a Crucial BX100 500GB M.2 SSD, the cheapest of all our storage solutions, at just 32 cents per gigabyte.

Alternatively, the speedier MX200 250GB can be had for $95, or 38 cents/GB. The downside is motherboard and device compatibility. You’ll need to ensure your motherboard has at least one M.2 slot, that the slot supports the size of M.2 drive you’re going to buy, and, for PCIe drives, that you’re willing to give up those PCIe lanes. Additionally, there’s a whole assortment of NGFF (next-generation form factor) sizes to choose from depending on your needs, ranging from 2242, 2260, and 2280 all the way up to 22110; the digits give the width and length in millimeters, so a 2280 drive is 22mm wide and 80mm long. To be honest with you, though, we haven’t even seen a drive in the latter size. There’s another downside to M.2, and that’s how it looks. With only a select few companies using black PCBs, these drives can stand out rather dramatically compared with the more traditional SSD. They do, however, provide a fantastic solution if you’re not bothered about appearances, have a nonwindowed case, or are using a NUC or some other small form factor device.
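
Size codes are easy to decode once you know the pattern. Here is a small sketch; `fits` and the set of supported lengths are hypothetical stand-ins for the list printed in your motherboard's manual, and the 22mm width check assumes a typical desktop M.2 slot.

```python
def m2_dimensions(code: str) -> tuple[int, int]:
    """Split an M.2 size code into (width_mm, length_mm): "2280" -> (22, 80)."""
    if not code.isdigit() or len(code) not in (4, 5):
        raise ValueError(f"not an M.2 size code: {code!r}")
    return int(code[:2]), int(code[2:])

def fits(code: str, supported_lengths_mm: set[int]) -> bool:
    """Hypothetical check against the lengths a board's M.2 slot accepts.

    Assumes a desktop slot that takes 22mm-wide modules only.
    """
    width, length = m2_dimensions(code)
    return width == 22 and length in supported_lengths_mm

# Example with a made-up board that accepts 2242, 2260, and 2280 drives.
print(m2_dimensions("22110"))       # (22, 110)
print(fits("2280", {42, 60, 80}))   # True
print(fits("22110", {42, 60, 80}))  # False
```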

Well, as you probably already know, SATA 3 has always been the problem child of storage speeds. Hell, we’ve hit on that enough during this very feature. However, M.2 and tapping into PCIe lanes weren’t the first solution to the problem. An additional physical interface came in the form of SATA Express. Essentially taking up two SATA 6Gbps ports plus some additional power, it’s an interface that enables speeds of up to 1,969MB/s read and write by using two of the same PCIe lanes that M.2 and U.2 now occupy. Unfortunately, as a standard, it just never took off, certainly not in the way that PCIe storage or SATA originally did. There were a couple of SATA Express SSDs out there, but nowhere near enough to build any kind of market. Indeed, companies such as Asus have now taken that interface and even created front-bay devices using USB 3.1 and providing up to 100W of power, just to find at least something to do with it.

To wrap up serial ATA, we’ve got to look at mSATA, which stands for mini-SATA. Essentially a smaller form factor SSD, mSATA was most commonly found in notebooks and early small form factor devices before being replaced by the higher-speed M.2 devices. It does, however, use the SATA host controller rather than PCIe, meaning you don’t lose out on those valuable PCIe lanes in smaller form factor builds, an advantage that has become largely moot over the last year or so.

And there you have it, folks: that’s the vast majority of super-fast storage solutions available to you today, and wow, is it a doozy. RAID 0 provides some impressive figures for its low price, but PCIe storage solutions will be the clincher going forward. If it weren’t for the innovations we’ve seen with manufacturers using the PCIe physical interface, RAID 0 might have taken the win. Alas, it has reached its limits, and stringing more and more drives together isn’t a viable solution to our speed woes. With SSD capacity ever increasing (say hello to 4TB Samsung drives coming soon), it’s only a matter of time before SSDs replace the aging hard disks of yesteryear. They’re far more responsive, more energy efficient, and quieter than their ancient counterparts, and even on the SATA 3 interface, still very potent. What it comes down to is personal preference and what you need. A 1.2TB Intel PCIe card might be ideal for a workstation-grade computer rendering 3D models every day, but if you don’t need the horsepower, or you detest the ugliness of the drive consuming another of your cherished PCIe slots, it’s probably not the solution for you. M.2 is great for small form factors, but again suffers from the same problem: currently, the only way of hiding these drives is to use a motherboard with thermal armor (here’s looking at you, Asus); otherwise, you’re stuck with it staring you in the face. RAID arrays are another great solution, less useful for gamers but, all in all, quite easy to set up and, in today’s climate, exceedingly stable. In fact, some of our writers have used RAID 0 SSD arrays for years with little to no trouble whatsoever.

All of that being said, this isn’t the end of storage speed, and indeed this year saw Intel and Micron announce 3D XPoint, the first new memory storage technology invented within the last four decades. Touting performance figures 1,000 times greater than traditional NAND flash, and endurance to match, these devices are set to hit the stage sometime in 2016. Although Intel hasn’t revealed exactly how 3D XPoint works (no doubt in an attempt to fend off potential competitors), roughly speaking, it forsakes the transistor in favor of a resistive material, where the resistance between two points indicates whether the bit of information is a 1 or a 0. Although still not quite as fast as today’s DDR4, the fact that it’s nonvolatile and stackable makes it a potentially revolutionary invention, depending, of course, on whether Intel can bring it to the consumer market. And, of course, we’ll need a new storage interface to transfer that amount of information: if that 1,000-fold figure carried straight over to a 550MB/s SATA SSD, you’d be looking at read and write speeds of a whopping 550,000MB/s, which might be a bit much, even for PCI Express.
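
The 550,000MB/s figure is simple multiplication, and the sketch below checks it against what a PCIe 3.0 x16 link can carry. It assumes that the 1,000-fold claim carries straight over to a 550MB/s SATA SSD's throughput; that is our illustrative assumption, not Intel's or Micron's stated specification.

```python
# Assumption (ours, for illustration): the 1,000x claim applied directly to
# the throughput of a SATA SSD running at roughly 550MB/s.
SATA_SSD_MB_S = 550
CLAIMED_FACTOR = 1_000

# PCIe 3.0 x16 ceiling: 8 GT/s per lane, 128b/130b encoding, 16 lanes, 8 bits per byte.
PCIE3_X16_MB_S = 8e9 * 128 / 130 * 16 / 8 / 1e6

hypothetical_mb_s = SATA_SSD_MB_S * CLAIMED_FACTOR
print(f"Hypothetical drive: {hypothetical_mb_s:,} MB/s")                 # 550,000 MB/s
print(f"PCIe 3.0 x16 link:  {PCIE3_X16_MB_S:,.0f} MB/s")                 # 15,754 MB/s
print(f"x16 links needed:   {hypothetical_mb_s / PCIE3_X16_MB_S:.0f}")   # 35
```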

So, the SSD is still king of the hill. It’s still the most versatile storage solution out there today. It has successfully supplanted the old-school HDD and cemented its way into our hearts by being the stealthiest, good-looking drive out there. As an interface, U.2 is still far too clunky looking, and for gaming, honestly, you don’t need more than a SATA SSD, certainly not for the time being. Perhaps the best setup today is a RAID 0 array for your OS, and then a standard 500GB-1TB drive for your games and media. Whatever happens, we’ll be glad to hear the last of the inevitable whir of a mechanical drive as it spins into history, alongside the great noises of our technological past. Farewell platters, farewell dial-up, farewell motherboard beeps!