HDR AND THE PC: IT’S COMPLICATED

HDR monitors will be awesome. And awfully complicated, says Jeremy Laird

Forget curved panels, frame synching, 4K, and high refresh rates. There’s a new technology that might just blow them all away for sheer visual pop. It’s all about searing brightness and even deeper blacks. It’s about dramatically increasing the number of colors a monitor can display. And it’s coming soon to a PC near you. Get ready for HDR, people.

The basic concept of HDR (high dynamic range) is simple. It means stretching out the extremes of display capability, delivering more, even when that means less. But it’s hard to point at any one feature and say, “This is HDR.” Nor is it easy to define in terms of numbers. There is no one metric that definitively determines what an HDR display is. Instead, there are a number of standards competing to become the de facto definition of HDR.


Samsung’s JS9500 is an HDR HDTV beast you can buy today.

This is going to cause confusion. Some monitor makers are likely to play a little fast and loose with how screens are marketed. Distinguishing between what you might call a full-HDR feature set and its constituent parts, such as wider color gamuts, is going to be a challenge both for marketeers and consumers. It’s even tricky to define in terms of where it lies in the display chain. Game developers have talked about HDR rendering for years. But no games have output HDR visuals, and there were no displays to support that.

Nevertheless, HDR technology is rapidly becoming the norm in the HDTV market, and it’s coming to the PC. So here’s all you need to know.

What, exactly, constitutes an HDR display? Or should that be an HDR-10 display? Or maybe UHD Premium? Hang on, what about Rec. 2020? And BT.2100, SMPTE 2084, 12-bit color, and wide gamuts?

From the get-go, HDR display technology presents a problem. It’s difficult to define. Already, armies of competing standards are attempting to occupy HDR’s high ground. Perhaps the best place to start, therefore, is to understand what HDR attempts to achieve. The aim is to simulate reality. Or, more specifically, to converge with the acuity or abilities of the human eye.

That’s because the human eye has limitations beyond which it is futile to aspire. There are things in the real world that humans can’t perceive, whether that’s brightness, or colors, or granular detail. So, there’s little point in trying to replicate them on a display. However, for the most part, what we can perceive still exceeds what displays are capable of. HDR, like several other technologies, aims to close that gap.

You’ll need HDMI 2.0a connectivity to hook a PC up to an HDR TV.

A handy example is Apple’s Retina displays. Pack the pixels in a display close enough together, and the photoreceptor cells in the retina (more specifically, the fovea, the most densely populated area of the retina) can no longer distinguish them individually. You’ve matched that aspect of the eye’s capability. Adding more pixels will not improve image quality as perceived by humans. Of course, in this context, much depends on the distance between the eye and the screen. The further away the viewing point, the more densely packed the pixels appear. Apple isn’t even consistent about what a Retina display is, and even the most detailed Retina display probably only has around one third the pixel density required to truly match the capability of the human eye. But the ambition to close the gap on the eye’s capabilities is what matters, and it’s what HDR is trying to do, too, only with different aspects of human vision.
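To put rough numbers on the viewing-distance point, here is a minimal sketch. It assumes the eye resolves detail down to about one arcminute, a conventional figure for 20/20 vision that is not taken from this article; the function name and the sample distances are purely illustrative.

```python
import math

def ppi_to_match_eye(viewing_distance_in, acuity_arcmin=1.0):
    """Pixel density (pixels per inch) at which a single pixel subtends
    `acuity_arcmin` arcminutes when viewed from `viewing_distance_in` inches.

    The one-arcminute default is an assumed acuity, not a measured limit.
    """
    pixel_size_in = viewing_distance_in * math.tan(math.radians(acuity_arcmin / 60.0))
    return 1.0 / pixel_size_in

# Phone, desktop monitor, and living-room TV distances (illustrative values).
for distance in (12, 24, 96):
    print(f"{distance:>3} in -> {ppi_to_match_eye(distance):6.0f} ppi")
```

Doubling the viewing distance halves the density needed, which is why a TV can get away with far coarser pixels than a phone. Passing a smaller `acuity_arcmin` models a sharper eye and raises the required density in proportion.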

The aspects relevant to HDR are broadly captured by the notions of brightness and color. HDR display technology aims to offer a broader range of both. HDR isn’t about adding ever more pixels. It’s about making each pixel work harder and look punchier. Better pixels, not more.

The problem is that HDR isn’t synonymous with a single metric. Color depth, contrast, and brightness are all in the mix. But no single aspect encompasses everything that makes for a brave new HDR display. Moreover, multiple HDR standards exist. Here’s an excerpt from the definition of one of them, Rec. 2100, that gives a flavor of the complexity involved: “Rec. 2100 defines the high dynamic range (HDR) formats. The HDR formats are Hybrid Log-Gamma (HLG), which was standardized as ARIB STD-B67, and the Perceptual Quantizer (PQ), which was standardized as SMPTE ST 2084. HDR-10 uses PQ, a bit-depth of 10 bits, and the Rec. 2020 color space. UHD Phase A defines HLG10 as HLG, a bit-depth of 10 bits, and the Rec. 2020 color space, and defines PQ10 as PQ, a bit-depth of 10 bits, and the Rec. 2020 color space.”

See what we mean? Anyway, let’s dig into the meaning of HDR, starting with color. You may be familiar with the notion of color channels and, more specifically, the number of bits per channel, for instance, 6-bit or 8-bit. To cut a long story short, colors in a display are created by combining three primary channels in the form of subpixels (red, green, and blue, hence RGB) to give a final target color. The bits per channel refer to the range of intensities available for each primary color channel. By varying the intensities, a range of colors is created, which is a mathematical function of the combined three channels.

BILLION COLOR QUESTION

By way of example, 8-bit-per-channel color, which until recently has represented the high end of consumer display technology, enables 16 million colors. Increase the color depth to 10 bits per channel, and the result is a billion colors. Take it up another notch to 12-bit, and we’re talking 68 billion

When it comes to inches-per-buck, TVs have usually looked cheap compared to dedicated PC monitors. But big-screen TVs haven’t always made great monitors, mainly due to the relatively low resolution of TVs. Even a full 1080p HDTV is low res compared to many PC monitors.

Moreover, when you stretch that 1920×1080 pixel grid over a 40, 50, or 60-inch panel, the result is big, fat, ugly pixels. Yuck.

With the advent of 4K resolutions, however, things changed. A 4K 40-inch TV makes for a similar pixel pitch as a 27-inch 2560×1440 resolution monitor. Ideal. But the first 4K TVs suffered from another historical TV shortcoming: low-fi display interfaces. Many couldn’t accept a 4K signal with a refresh rate above 30Hz. Fine for movies, no good for a PC monitor.
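Pixel density follows directly from resolution and diagonal size, so the comparison is easy to check. This is a small sketch only; the screen sizes listed are illustrative assumptions rather than specific products.

```python
import math

def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density along the diagonal, assuming square pixels."""
    return math.hypot(width_px, height_px) / diagonal_in

# Illustrative screens, not specific models.
screens = [
    ("1080p, 40-inch TV", 1920, 1080, 40),
    ("1080p, 60-inch TV", 1920, 1080, 60),
    ("4K, 40-inch TV", 3840, 2160, 40),
    ("2560x1440, 27-inch monitor", 2560, 1440, 27),
]

for name, width, height, diagonal in screens:
    print(f"{name:<28} {pixels_per_inch(width, height, diagonal):6.1f} ppi")
```

On these figures, a 40-inch 4K panel and a 27-inch 2560×1440 monitor land within a couple of pixels per inch of each other, while 1080p at TV sizes is roughly half that or less.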

However, with the wider adoption of HDMI 2.0, it was possible to drive a 4K HDTV at 60Hz from a PC video card. Suddenly, using a relatively cheap 4K HDTV as a PC monitor made sense. The same thinking applies to HDR TVs. Granted, there are limits to how big it’s practical to go with a PC monitor. And the fat, ugly pixel problem reappears as sizes extend toward 50 inches and beyond. But 4K TVs with at least partial HDR support, such as the Samsung KU6300 series, can be had for little more than $600 for the 60-inch model. That’s one hell of a deal.

However, such a screen won’t have much, if anything, by way of PC-friendly features. Forget driving it beyond 60Hz.

It almost definitely won’t have adaptive sync technology, such as Nvidia’s G-Sync or AMD’s FreeSync. It may not offer the greatest pixel response. But perhaps the biggest killer, especially for gaming, is input lag.

For HDTVs, a bit of input lag isn’t a major concern. On the PC, it’s downright horrible. The moral of this particular story, then, is that it’s absolutely essential to try before you buy.

colors. That’s a lot. So how does that map to the capabilities of the human eye?

The target here, or at least one target, is something known as Pointer’s Gamut. It’s a set of colors that includes every hue that can be reflected off a real-world surface, and seen by the human eye. How it is calculated probably doesn’t matter. Nor does the fact that there’s a fair bit of variance from one human to the next. What is notable is that it’s only reflected colors, not luminescent colors, which can’t be fully reflected off material surfaces. Hence, even if a display completely captures Pointer’s Gamut, it doesn’t cover everything the eye can see.

However, Pointer’s Gamut is far larger than the standard color spaces or gamuts of PC monitors. By way of example, the full UHD Premium specification (which is one of several HDR specs) includes a color space known as Rec. 2020. It very nearly covers 100 percent of Pointer’s Gamut. The most common color space PC monitors support is sRGB, which only covers a bit more than two thirds of the colors of Pointer’s Gamut.

But supporting sRGB isn’t the same as fully achieving sRGB. In other words, your current sRGB screen probably can’t achieve the full range of sRGB colors, which is a space that’s significantly smaller than Pointer’s Gamut, which in turn doesn’t encompass every color the human eye can perceive. Put simply, your screen may look nice, but odds are that it’s pretty crappy at creating colors by any objective metric.

To grasp that difference in numbers, simply recall those bits-per-channel. UHD Premium requires a minimum of 10 bits per channel, or a billion colors. Unless you have a high-end pro display with 10-bit color, an HDR screen means a massive jump from around 16 million to at least a billion colors.
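The arithmetic behind those color counts is simple: each channel offers two to the power of its bit depth intensity levels, and the three channels multiply together. A quick sketch, with a helper name of our own invention:

```python
def color_count(bits_per_channel, channels=3):
    """Distinct colors available when every channel has 2**bits levels."""
    return (2 ** bits_per_channel) ** channels

for bits in (6, 8, 10, 12):
    levels = 2 ** bits
    print(f"{bits:>2}-bit: {levels:>5} levels per channel, {color_count(bits):,} colors")
```

Each extra two bits per channel multiplies the total by 64, which is how 16 million becomes a billion, and a billion becomes 68 billion.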

Lenovo’s Yoga X1 is one of the first PCs to boast OLED screen tech.

The other major part of the HDR equation is, effectively, contrast. It’s a bit more complicated than that because true HDR capability goes beyond mere contrast. To understand why, consider a display that can fully switch off any given pixel. In other words, a display capable of rendering true black tones. Strictly speaking, this is virtually, though not absolutely, impossible for an LCD monitor; there is always some leakage of light through the liquid crystals. It’s theoretically possible to have an individual and active backlight for each pixel, but that’s highly impractical. Instead, technologies where pixels create their own light are a far more efficient route to infinite contrast. Which is where OLED displays come in. But we digress, and you can read about OLED displays in the boxout over the page.

The point is that if you have true or nearly true blacks, almost any amount of light constitutes effectively infinite contrast by comparison. So even a really dim screen

Probably the biggest challenge for anyone interested in HDR is knowing what to buy. At best, HDR is an umbrella term that covers a range of technologies and features. However, some standards are emerging that should make buying easier.

Currently, the two best established standards are HDR-10 and Dolby Vision. The most obvious difference between the two is color depth. As its name suggests, HDR-10 requires 10-bit per channel color capability, while Dolby Vision ups the ante to 12-bit. How much difference that will make in practice remains to be seen, but on paper, Dolby Vision is superior in that regard.

The other major difference is support for brightness. HDR-10 supports up to 1,000cd/m2 of brightness for LCD displays, while Dolby Vision goes up to 4,000cd/m2, with plans to support 10,000cd/m2 in the future. Again, Dolby Vision is superior, and it is likewise much more expensive.

What’s not clear is whether PC monitor manufacturers will adopt either of these standards. Making matters even more complicated is the fact that a monitor may have some HDR capabilities without being marketed as an HDR display. That’s especially true of any OLED PC display. OLED panels have very high contrast capabilities. Indeed, an OLED display is only required to generate around half the brightness of an LCD display to comply with the HDR-10 standard for that very reason.

Complicating things even further, the UHD Premium standard adopts HDR-10 as a subsection of its requirements. So it’s another label you may see attached to a display, even if it’s not a separate HDR standard in a strict sense. Ultimately, it’s early days for HDR displays, and it’s not clear which standard (or standards) will become the norm on the PC.

would be capable of infinite contrast if the pixels were fully switchable, as per OLED. Thus HDR doesn’t just deal with relative values of brightness, but also absolutes. Again, by way of example, UHD Premium stipulates a maximum brightness of at least 1,000cd/m2 for an LCD screen, roughly three times brighter than a typical LCD.
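The relationship between peak brightness, black level, and contrast can be sketched in a few lines. The black levels and the OLED peak figure below are illustrative assumptions, not measurements of any real panel; only the 1,000cd/m2 peak and the "roughly a third of that" typical LCD come from the text.

```python
import math

def contrast_ratio(peak_nits, black_nits):
    """Peak luminance divided by black luminance (1 nit = 1 cd/m2)."""
    if black_nits == 0:
        return math.inf  # a pixel that is truly off gives unbounded contrast
    return peak_nits / black_nits

def dynamic_range_stops(peak_nits, black_nits):
    """The same ratio expressed in photographic stops (doublings)."""
    return math.log2(contrast_ratio(peak_nits, black_nits))

# (label, peak, black) with assumed black levels.
panels = [
    ("Typical LCD", 300.0, 0.3),
    ("HDR LCD", 1000.0, 0.05),
    ("Dim OLED", 100.0, 0.0),
]

for label, peak, black in panels:
    ratio = contrast_ratio(peak, black)
    stops = dynamic_range_stops(peak, black)
    print(f"{label:<12} {ratio:>10,.0f}:1  {stops:5.1f} stops")
```

The dim OLED row shows why contrast alone can't define HDR: its ratio is unbounded, yet it peaks at a tenth of the brightness of the HDR LCD. That is why the standards also specify absolute brightness.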

BACKLIGHT OR BLACK

As for how that’s achieved with existing LCD tech, obviously a more powerful backlight is needed. But, very likely, a backlight composed of subpixels (though not as many as the LCD panel), and thus capable of local dimming, is required. A single, big, dumb backlight cranking out massive brightness would enable greater brightness, but it would also guarantee that the black levels are very poor in some scenarios.
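A toy model shows why a segmented backlight helps black levels. It is a deliberately simplified sketch: one row of pixels, each backlight zone driven to its brightest pixel, and an assumed LCD leakage of 0.1 percent. The function name, the zone layout, and the leakage figure are all hypothetical.

```python
def displayed_nits(frame, zone_size, peak_nits=1000.0, leakage=0.001):
    """Luminance each pixel actually emits, for target levels in [0, 1].

    Every backlight zone is driven to the brightest pixel it covers, and
    the LCD layer can never block more than (1 - leakage) of that light.
    """
    shown = []
    for start in range(0, len(frame), zone_size):
        zone = frame[start:start + zone_size]
        brightest = max(zone)
        backlight = brightest * peak_nits
        for level in zone:
            if brightest == 0:
                shown.append(0.0)  # zone backlight is off: true black
                continue
            transmittance = max(level / brightest, leakage)
            shown.append(backlight * transmittance)
    return shown

# A dark scene with one small, very bright highlight.
frame = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0]

global_backlight = displayed_nits(frame, zone_size=len(frame))  # one big zone
local_dimming = displayed_nits(frame, zone_size=4)              # two zones

print("global:", global_backlight)
print("local: ", local_dimming)
```

With a single backlight, every dark pixel glows faintly because the whole panel is lit for the highlight. With two zones, the half of the screen without the highlight goes fully dark, though pixels sharing a zone with the highlight still leak.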

For an idea of what this combination of huge contrast and extreme brightness will enable, imagine the powerful flash of light as a car passes on a really bright day, and the glass momentarily reflects the sun directly into your eyes. Even an HDR screen won’t put out real-world levels of light for such events. But the effect of simulating them on screen will be far, far more realistic.

Displays that capture much or all of these new color, contrast, and brightness capabilities already exist. HDR is the latest big thing in HDTVs, and using an HDR HDTV as a monitor is an option for PC enthusiasts. HDR is also a new feature in the latest refresh of games consoles from Microsoft and Sony. But that same technology is coming to screens designed to be used with PCs. The downside is that it will likely come in many confusing forms. Already, there are inconsistencies with terminology, such as “4K” and “UHD,” which are used virtually interchangeably but are not the same.

Even Asus’s latest 34-inch RoG might look pedestrian when the first HDR monitors arrive.

Then there’s the likelihood that some monitors will support certain aspects of what’s known as HDR, but not others. For instance, you could argue that, for PC gaming, what matters is the contrast and brightness aspects of HDR, along with speed, in terms of pixel response. So you might not want a more expensive and slower LCD panel that’s required to deliver

Whatever, with the arrival of bona fide HDR display technology, the PC games industry is boning up to support full HDR visuals. In fact, it’s partly being driven by the adoption of HDR technology in the latest round of games console refreshes from Microsoft and Sony.

What’s more, converting an existing SDR PC game to HDR is not a particularly onerous task. Straightforward mapping processes can expand SDR color maps to HDR ranges via algorithmic translation without massive effort. So, there’s a good chance that patches adding HDR support to existing games could become widespread in the near future. Nvidia is reportedly working on an HDR patch for Rise of the Tomb Raider, for instance. But it will probably be games with console siblings, such as the Forza, Battlefield, and Gears of War series, that will be the first games to get full HDR capability on the PC. It’s also worth remembering that just about anything, be it games or HD video, will look better on a proper HDR display, even if the content itself isn’t mapped for HDR output.
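As a rough illustration of what such a mapping might involve, the sketch below takes an 8-bit sRGB value, converts it to linear light, scales it to an absolute brightness, and re-encodes it with the PQ curve from SMPTE ST 2084 as a 10-bit code. It is a naive example, not how any particular game or patch works: the 100cd/m2 SDR white level and the full-range 10-bit output are assumptions, and the function names are ours.

```python
# PQ (SMPTE ST 2084) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32

def srgb_to_linear(code):
    """8-bit sRGB code value -> linear light in [0, 1]."""
    v = code / 255.0
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4

def pq_encode(nits):
    """Absolute luminance in cd/m2 -> PQ signal in [0, 1] (10,000 nits = 1.0)."""
    y = max(nits, 0.0) / 10000.0
    yp = y ** M1
    return ((C1 + C2 * yp) / (1.0 + C3 * yp)) ** M2

def sdr_code_to_pq10(code, sdr_white_nits=100.0):
    """Map one 8-bit SDR channel value to a full-range 10-bit PQ code.

    sdr_white_nits is an assumed brightness for SDR white; raising it
    stretches the old content further up the HDR range.
    """
    nits = srgb_to_linear(code) * sdr_white_nits
    return round(pq_encode(nits) * 1023)

for code in (0, 64, 128, 255):
    print(code, "->", sdr_code_to_pq10(code), "/", sdr_code_to_pq10(code, 300.0))
```

With SDR white pinned at 100cd/m2, the old content only occupies about the lower half of the PQ code range, which gives a sense of how much headroom HDR leaves for highlights.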

the wider color gamuts. You might want what amounts to an HDR backlight with local dimming, combined with a TN panel with relatively limited colors. But would that be an HDR monitor? Or something else? Tricky. Other displays may take up the broader color gamut, and leave off the local dimming. It’s very early days, and it will probably take a few years to shake out, especially when you factor in the likely coming transition from LCD tech to OLED.

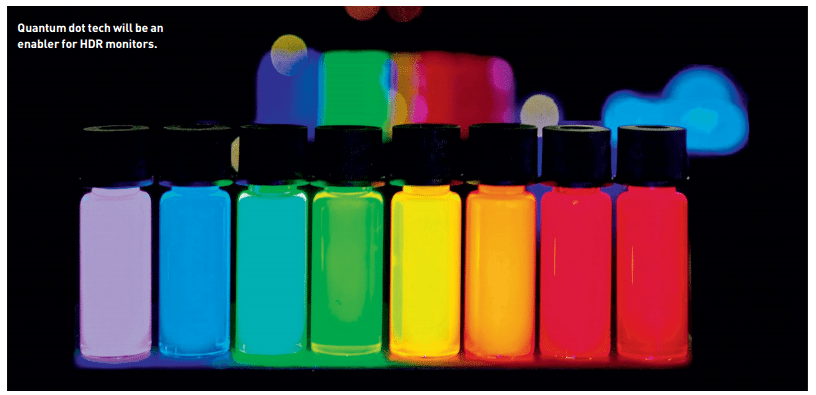

Speaking of that transition, it’s yet to be seen how PC monitor manufacturers will achieve HDR capabilities. If LCD tech is used, a backlight with local dimming is required to achieve the extreme contrast ratios. Quantum dot tech can also be used to bolster the range of colors on offer in combination with a 10-bit or 12-bit LCD panel. But arguably, any attempt to achieve HDR visuals via LCD tech is a bit of a kludge.

Instead, OLED displays with pixels that are their own light source are a much more efficient way to do things. What’s more, OLED technology lends itself better, in cost terms, to more compact PC displays than massive HDTVs. It’s a similar rationale that has seen OLED become common in smartphones. Very likely, therefore, HDR LCD monitors will be at best a stopgap before OLED becomes the dominant solution. With that in mind, it might make sense to consider a cheap HDR LCD TV as your own stopgap solution, while we wait for OLED HDR monitors to become affordable.

CAN YOU HAVE IT ALL?

Things get even more complicated with the technologies needed to achieve UHD color depths beyond the screen itself. With all those colors and ranges of intensity, HDR is seriously bandwidth hungry. HDMI 2.0, for instance, can’t carry full 12-bit-per-channel color at 60fps and 4K resolution without chroma subsampling, and signaling HDR to the display at all calls for HDMI 2.0a. Upping the refresh rate to 120Hz and beyond only makes the bandwidth limitation worse. In other words, a display that does it all (120Hz-plus, adaptive-sync, HDR, the lot) isn’t coming any time soon. Even when it does, your existing video card almost certainly won’t cope when gaming.
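A back-of-the-envelope calculation shows how quickly color depth eats into a video link. The sketch assumes the commonly quoted figures for HDMI 2.0 (18Gbps raw, of which 8 in every 10 bits carry picture data) and the standard 594MHz pixel clock for 4K at 60Hz including blanking; treat both as assumptions rather than a reading of the specification.

```python
HDMI_2_0_RAW_GBPS = 18.0                              # assumed link rate
HDMI_2_0_PAYLOAD_GBPS = HDMI_2_0_RAW_GBPS * 8 / 10    # after 8b/10b coding overhead
PIXEL_CLOCK_4K60_MHZ = 594.0                          # assumed 4400 x 2250 total x 60Hz

def video_data_rate_gbps(pixel_clock_mhz, bits_per_channel, channels=3):
    """Payload needed for full-resolution color on every channel."""
    return pixel_clock_mhz * 1e6 * bits_per_channel * channels / 1e9

for refresh_scale, label in ((1, "4K 60Hz"), (2, "4K 120Hz")):
    for bits in (8, 10, 12):
        rate = video_data_rate_gbps(PIXEL_CLOCK_4K60_MHZ * refresh_scale, bits)
        verdict = "fits" if rate <= HDMI_2_0_PAYLOAD_GBPS else "too much"
        print(f"{label:<9} {bits:>2}-bit: {rate:5.2f} Gbps ({verdict})")
```

Under these assumptions only 8-bit color squeezes through at 4K and 60Hz with full color resolution, and nothing fits at 120Hz.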

You’ll also require a compliant video card to enable HDR visuals. For Nvidia GPUs, that’s Maxwell or Pascal families (GTX 960, GTX 980, GTX 1070, GTX 1080, and so on). For AMD, its Radeon R9 300 Series can do HDR at 60Hz up to 2560×1600. For full 4K 60Hz HDR output, only the latest Polaris boards, such as the RX 480, can pull it off.

Then there’s the question of content. In terms of video, there’s very little out there. It was only in 2014 that the Blu-ray standard was updated to support 10-bit per channel color. HDR photos are in more plentiful supply. Even most smartphones support HDR image capture, achieved by capturing the same image multiple times with a range of exposures, then combining the results into a single image. In theory, HDR video capture works the same way; it’s just harder to achieve because of the need to process so much data in real time. As for games, much of the early HDR content on the PC will likely be driven by the parallel emergence of HDR tech on games consoles (see boxout above).
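The multi-exposure idea can be sketched with a handful of pixels. This is a simplified illustration, not any phone’s actual pipeline: it assumes a perfectly linear sensor that clips at 1.0, and it weights each reading by how far it sits from the clipped extremes. All the names are hypothetical.

```python
def simulate_capture(scene, seconds):
    """Pretend sensor: linear response that clips at 1.0."""
    return [min(radiance * seconds, 1.0) for radiance in scene]

def merge_exposures(exposures):
    """Combine (pixels, exposure_seconds) pairs into relative scene radiance.

    Each reading is divided by its exposure time to estimate radiance, and
    mid-tone readings are trusted more than near-black or clipped ones.
    """
    merged = []
    for i in range(len(exposures[0][0])):
        weighted_sum = 0.0
        weight_total = 0.0
        plain_sum = 0.0
        for pixels, seconds in exposures:
            value = pixels[i]
            weight = 1.0 - abs(2.0 * value - 1.0)  # 0 at the extremes, 1 at mid-gray
            weighted_sum += weight * value / seconds
            weight_total += weight
            plain_sum += value / seconds
        if weight_total > 0:
            merged.append(weighted_sum / weight_total)
        else:
            merged.append(plain_sum / len(exposures))  # every reading was clipped
    return merged

scene = [0.5, 8.0, 40.0]  # shadow, mid-tone, bright highlight (relative units)
shots = [(simulate_capture(scene, t), t) for t in (0.02, 1.0)]
print(merge_exposures(shots))
```

The long exposure clips the two brighter pixels and the short one barely registers the shadow, yet the merged result recovers all three. A real camera would then tone-map that range back down for a conventional screen, which is precisely the step an HDR display makes less necessary.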

HDR is one hell of a complicated technology. Currently, it’s not clear when the first dedicated HDR monitors will go on sale, which standards they will conform to, or how much they will cost. But HDR is coming. So we’d better get ready.