Keep Your Data Forever

Your data is under threat from many dangers, ranging from hardware damage to malware. Only a smart backup strategy will allow you to keep photos, documents and media safe over the long term

By Christoph Schmidt

Table of Contents

- Smart Back-Ups With System

- Data security in three stages

- Sometimes even the professionals are powerless

- Pros & Cons: Hard drives as data archives

- Failure risk of HDDs

- HDDs: Inexpensive And Large Memories

- For magnetic disks, redundancy is a must

- HDD Defect: Respond quickly and properly

- Making proper use of Windows Access Rights

- NAS: Convenient and secure back-ups

- HDD protection with FreeFileSync

- Back-ups with version history

- Real-time synchronisation on an HDD

- Software alternatives for sync and back-up

- Cloud: ‘Eerily’ Convenient

- Pros & Cons: Cloud storage as an immediate back-up

- Cloud-backup of mobile phone & PC

- Encrypted in the cloud

- Choosing the right provider

- Storage capacities and handy sync software

- Optical Discs: Can’t Be Erased & Long-Lasting

- Service life not unlimited

- Organising and burning data

- Pro & Cons: DVD/Blu-ray as a permanent archive

- M-Disc: The data should become as old as the hills

Important personal data can vanish in a flash, and the loss almost always catches us completely by surprise. Such an abrupt death of data can have a variety of causes. According to data recovery company Kroll Ontrack, data is lost most frequently because a storage medium is no longer detected. In such situations, the drive breaks down without warning due to age or electronic damage. The second most frequent scenario involves devices being dropped. If the hard drive happens to be running at the time, the fall dashes the read head against the magnetic disk and damages it. Other causes include damage due to fire, smoke or water. Among the data losses recorded by Kroll Ontrack in 2016, cases involving water damage (300 cases) were almost twice as frequent as cases involving virus attacks (171 cases). When you encounter such problems, your only hope is an expensive recovery operation carried out in a professional data recovery laboratory. However, even this occasionally yields no result. For example, it won’t help if an encryption Trojan has launched an extensive attack, or if large amounts of data have been written onto the storage medium after an unintentional delete operation.

Smart Back-Ups With System

The variety of potential problems makes it clear that every effective backup strategy needs to be multifaceted. Files should be stored on different destination mediums that provide protection against different types of threats. Real-time protection against sudden hardware failures is just as important as the existence of a non-erasable copy that is stored at a secure location. The degree of protection grows with the frequency of the back-up operations and the variety of methods they employ, whereby uncomplicated, smart arrangements are much more effective than gung-ho actions, which quickly peter out because of the effort involved. In this context, the term ‘smart’ refers to an arrangement that makes use of different backup types (e.g. hard drives, cloud services, optical data storage) in a manner that lets each type play to its strengths, while its drawbacks are balanced out by the other options.

For example, while you can create back-ups easily, automatically and quickly on a second hard drive, such back-ups can be deleted accidentally or destroyed by an encryption Trojan just as easily. Back-ups stored on optical data carriers are safe from both these dangers, but the cumbersome procedure stands in the way of frequent backup operations. Moreover, data stored on HDDs and optical discs can be threatened by age, loss or house fires. Cloud storage, on the other hand, can provide protection against these hazards, but raises questions about privacy and data protection.
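
The idea that each medium covers the weaknesses of the others can be sketched in a few lines of Python. The threat labels and the mapping below are illustrative assumptions that loosely follow the paragraph above; they are not a complete risk model.

```python
# Which threats each backup medium withstands, loosely following the text.
# The labels and the exact mapping are illustrative assumptions.
PROTECTS_AGAINST = {
    "second_hard_drive": {"hardware_failure"},
    "optical_disc": {"hardware_failure", "accidental_deletion", "ransomware"},
    "cloud": {"hardware_failure", "fire_or_theft"},
}

ALL_THREATS = {
    "hardware_failure",
    "accidental_deletion",
    "ransomware",
    "fire_or_theft",
}


def uncovered_threats(media):
    """Return the threats that none of the chosen media protects against."""
    covered = set()
    for medium in media:
        covered |= PROTECTS_AGAINST[medium]
    return ALL_THREATS - covered
```

With only a second hard drive, three of the four threats remain open; combining all three mediums leaves none uncovered in this simplified model.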

Data security in three stages

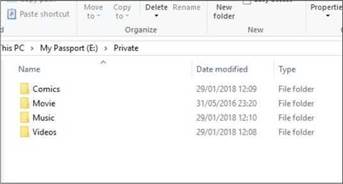

A good back-up plan begins with the task of organizing the data in question. The best thing to do would be to create a new “My Files” folder, and use it to store all your files that really need to be backed up. Apart from this, you should also create a “Private” folder for particularly sensitive documents and photos that should never get into the wrong hands (e.g. beach holiday photos). The back-up procedures are now carried out in three stages:

If an encryption Trojan like Petya has launched an attack, the data is essentially gone. A back-up can save the day under such circumstances

Sometimes even the professionals are powerless

Destroyed hardware (e.g. due to a fire) is a frequent and, above all, abruptly occurring cause of data loss

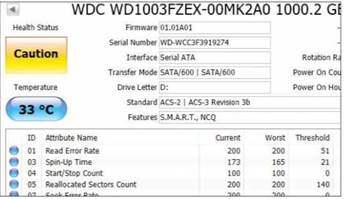

The CrystalDiskInfo tool displays the SMART values of drives. If there are abnormalities, you should replace the drive

Since many installed programmes use the Windows libraries without being asked, you should create storage areas for data that you want to back up. An extra “Private” folder is helpful

Pros & Cons: Hard drives as data archives

Classical magnetic hard drives are well-suited for extensive back-ups – if you keep certain things in mind

Very high capacities (up to 12 terabytes) possible on a single drive

Backups and recoveries can be executed quickly, easily and automatically

Relatively low price per gigabyte

Back-ups can quickly be deleted / overwritten

Hardware defects rarely announce themselves

Service life can vary significantly

NAS hard drives like Western Digital’s Red or Seagate’s IronWolf models run quietly and coolly, while also offering long service lives

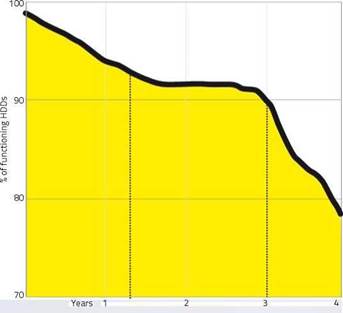

Failure risk of HDDs

Cloud backup provider Backblaze uses inexpensive consumer hard drives on a massive scale and heads off failures through a high level of redundancy. According to its statistics, a relatively high number of drives malfunction in continuous operation within the first 1.5 years. The survival rate then remains stable for a while, before falling steeply once again from the third year onwards.

Back-up stage 1: Real-time protection against hardware defects and accidents. The data is always synchronised to a second hard drive, a NAS or cloud storage. It remains accessible, which in turn necessitates the second back-up stage.

Back-up stage 2: Resistance to malware and operating errors. The data is regularly backed up on a storage medium that is normally separated from the computer, or which can’t be written to by standard users. As this requires user activity, it can be done whenever new files have accumulated (e.g. weekly or monthly).

Back-up stage 3: Catastrophe hazard insurance against a house fire or break-in/theft. Under this arrangement, a copy of the data is stored outside the home (e.g. at the workplace, or with relatives). Due to the large amount of effort involved, it’s hardly practical to create this kind of backup more than a few times a year.

Below are various methods for each one of the aforementioned backup steps. We’ll start with hard drives, divided into internal, external and NAS. And when it comes to cloud services, we’ll explain how you can combine them with data protection and privacy. Finally, we’re going to demonstrate that there are still uses for the somewhat old-fashioned optical data medium.

HDDs: Inexpensive And Large Memories

Given the quantities of data that have become the norm, there’s no avoiding hard drives, which are well-suited for all three back-up stages. In addition to offering a lot of capacity at affordable prices, they are also great for quick backups and recoveries. However, HDDs have one big weakness. Although the statistical average indicates that they work for several years, individual units can break down just a few days or weeks after you start using them, and this cannot be predicted in advance. According to the service life statistics that cloud service provider Backblaze has collected at its data centre, the possibility of manufacturing defects means that you should assume that brand-new hard drives are as failure-prone as hard drives that have been in continuous use for three or more years. The latter corresponds to about five years of normal use. In normal use the drive is switched off for longer than it is switched on, but the frequent start-up and shut-down cycles put additional strain on it over the long term.

For magnetic disks, redundancy is a must

This is why duplicated information constitutes the most important principle of back-ups on hard drives, meaning new files are ideally stored on at least two separate drives as quickly as possible. If you’re using a desktop PC, the easiest way to do this involves a second internal hard drive, onto which the data would be copied at regular intervals. This kind of data copying process can either be done manually or automatically.
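
As a rough illustration of such a copying job, here is a minimal Python sketch that copies new and newer files to a second drive and never deletes anything at the destination. The function name `update_copy` and the `dry_run` switch are made up for this example; a dedicated backup tool would handle many more edge cases.

```python
import shutil
from pathlib import Path


def update_copy(source: Path, destination: Path, dry_run: bool = False) -> list[Path]:
    """Copy files that are missing or older in destination; delete nothing.

    Returns the relative paths that were (or, with dry_run, would be) copied.
    """
    copied = []
    for src in sorted(source.rglob("*")):
        if not src.is_file():
            continue
        rel = src.relative_to(source)
        dst = destination / rel
        # Skip files whose copy is already at least as new as the original.
        if dst.exists() and dst.stat().st_mtime >= src.stat().st_mtime:
            continue
        if not dry_run:
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, dst)  # copy2 also preserves the modification time
        copied.append(rel)
    return copied
```

Calling it first with `dry_run=True` shows what would be copied without touching the destination. On file systems with coarse timestamps, a file may occasionally be copied again although it has not changed, which is harmless.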

You will be able to find a very wide range of 3.5-inch SATA drives. NAS hard drives in particular are the best choice for a data storage device, because they are designed for quiet and energy-efficient long-term usage, which in turn results in less heat generation and potentially longer service lives. The most well-known series of NAS models is Western Digital’s WD Red, while Seagate offers similar products with the IronWolf model range. Regardless of whether you end up choosing a NAS hard drive or a normal hard drive that offers a higher level of performance, the longest service lives are those of HDDs that have been approved for continuous operation (‘24/7’) by the manufacturers, and which come with a warranty of at least three years. 3.5-inch SATA hard drives can easily be installed in a desktop PC too. All you have to do is screw the drive into a free bay, connect the SATA data and power cables and format the drive once the system has booted up.

HDD Defect: Respond quickly and properly

Data that is stored on two separate drives will be safe if a defect affects only one drive. However, if something like this happens, you should immediately replace the broken HDD and copy the data onto a new one.

But do be aware that the prolonged copying could end up finishing off the drive that is still functioning, especially if it’s old, or has given you problems in the past. Because of that, you should always start off by copying only the most important data (i.e. the data that has not yet been stored on another medium during the previous backup operation). Once you have done that, copy the rest of the data after a cooling-off break.

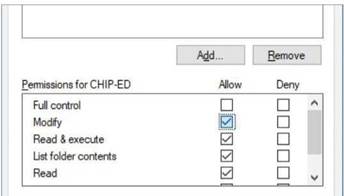

Making proper use of Windows Access Rights

Internal or permanently connected external hard drives are not immune to accidental deletion or encryption Trojans. A certain amount of protection can be provided by the Windows access permissions. To set that up, access the Windows settings and create a standard user without administrator rights for daily tasks. Set the folder access rights so that this standard user has full access to the source folder that is to be backed up, but only has read access to the destination folders associated with the back-ups (right-click the folder, “Properties | Security | Edit …”). Now, add another standard user for the back-ups. This user should not only have read access to the source folders, but also full access to the destination folders. The back-up software must run under the backup user’s account. This can be set in the software, or with the help of the Windows task scheduler (“When running the task, use the following user account:”). Ransomware usually takes over the standard account used for daily work, so this arrangement can prevent it from encrypting the back-ups. However, all this effort will be pointless as soon as the malware gains admin rights.
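
The same permission scheme can also be written down as `icacls` command lines instead of clicking through the dialogs. The Python sketch below only builds the commands and does not run them; the function name, the user names and the folder paths are placeholders. It assumes that no inherited or group permissions grant the daily user additional write access, which you would have to check separately.

```python
def icacls_commands(source, destination, daily_user, backup_user):
    """Build icacls argument lists for the two-user scheme described above.

    (OI)(CI) lets the entry apply to files and subfolders,
    F stands for full access and R for read-only access.
    """
    return [
        # The daily user works in the source folder with full access ...
        ["icacls", source, "/grant", f"{daily_user}:(OI)(CI)F"],
        # ... but may only read the back-up destination (/grant:r replaces
        # any explicit permissions previously granted to this user).
        ["icacls", destination, "/grant:r", f"{daily_user}:(OI)(CI)R"],
        # The backup user reads the source and writes to the destination.
        ["icacls", source, "/grant", f"{backup_user}:(OI)(CI)R"],
        ["icacls", destination, "/grant", f"{backup_user}:(OI)(CI)F"],
    ]
```

On Windows, each list could be passed to `subprocess.run` from an administrator account; afterwards, check the effective permissions in the “Security” tab.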

This is why the second back-up stage is required. It will give you daily to monthly back-ups on an external drive that is isolated from the computer, except for the back-up passes themselves. All you would need for this is a simple 2.5-inch USB drive, but if the situation involves large amounts of data, we would recommend that you install a large and robust NAS hard drive in a 3.5-inch USB casing. Alternatively, you could go ahead and invest in one of the most convenient local backup solutions: A NAS (network attached storage) device.

NAS: Convenient and secure back-ups

A good NAS device provides perfect real-time protection, because it will constantly backup all new files. The Synology Cloud Station and Qnap QSync are examples of good NAS. Operations of the two systems are quite different from each other, with detailed instructions to be found on the NAS manufacturers’ websites. However, a NAS system can only provide protection against hard drive defects if it has at least two drives (‘bays’ in NAS jargon = drive slots). The 2-bay or 4-bay systems usually combine two drives to form a single RAID-1 volume, which

it’s very easy to install an additional 3.5-inch SATA hard drive in a PC. Just screw it in tight, connect it, and you’re done

When it comes to the Windows folder permissions for the backup destination folder, the standard user should only be granted reading access. if malware seizes the account, it won’t be able to delete the backups

Comfort backup A NAS can simultaneously take care of two back-up stages, which can be very convenient for home networks with multiple PCs

The web interface of the NAS systems can be used to activate a real-time file synchronisation facility (here: Synology CloudStation), with which the client software on the Windows PC communicates

HDD protection with FreeFileSync

The open-source FreeFileSync tool allows you to set up a continuous real-time synchronisation operation to the hard drive.

In order to use FreeFileSync to carry out a file synchronisation operation, you should

first create a normal synchronisation procedure by selecting the source and destination folders, as well as the type of synchronisation…

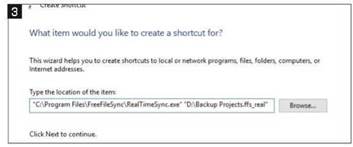

An entry in the autostart menu ensures that RealTimeSync always runs in conjunction with the previously-defined sync batch file, in order to immediately copy new and modified files into the destination folder

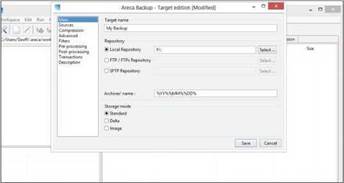

Back-ups with version history

The Areca Backup tool creates back-up archives, which contain older versions of the backed-up files as well

simultaneously saves each file on both drives.

However, instead of combining the drives into a RAID-1 volume, you can also choose to activate the second back-up stage (malware resistance) straight away by setting up both hard drives as separate volumes. Save the real-time back-ups from the PC on volume 1. Then use the versioned backup solution of the NAS device to regularly create snapshots on the second volume, with each snapshot containing the exact state of the back-up folder at that point in time. For Synology and Qnap, such solutions are called ‘Hyper Backup’ and ‘Hybrid Backup’, respectively; instructions for either solution can be found on the manufacturers’ websites. If you set the access rights for the snapshot destination folder such that the PCs do not have write access to it, this amounts to effective malware protection.

Once this system has been set up, a NAS device can comfortably cover the first two back-up steps, even for multiple computers in the home network. However, those who also want to protect their data from plundering intruders or house fires will have to store additional copies outside the home. This can be done using either a cloud service, an external hard drive or optical discs.

Real-time synchronisation on an HDD

The easiest way to handle back-up stage 1 of our plan (real-time protection) involves using the synchronisation tool of a NAS system or cloud service. After the installation procedure has been completed, all you have to do is set up the folders that are to be monitored. Once you’ve done this, everything else happens automatically. Creating the same functionality with local hard drives and freeware is more cumbersome, but it can be done with the open-source FreeFileSync software. After launching the tool, use the “Select” button under the “Compare” button to select the source folder in the file system which is to be monitored (caution: this doesn’t work with Windows libraries like “Pictures”). Now, use the right-side “Select” button under “Synchronise” to select the destination folder on the second drive. Click the arrow between the green gear wheel and “Synchronise” and select the “Update” option. The “Compare” button displays the differences; on the first pass, all the files and folders in the source directory are displayed. The first synchronisation operation (in which all the files are copied) can then be started immediately. Now, select the “File | Save as batch job” option, activate the “Execute while minimised” option in the subsequent dialogue, select the “At the end: Finish” option and assign a unique name.

Now, enter the following into the address bar of a Windows Explorer window: “shell:startup”, [Enter]. Right-click within the empty window and select “New | Shortcut”. In the “Type the location of the item” field, enter the complete path to “RealTimeSync.exe”, a space and the path to the previously saved batch file, each enclosed in quotation marks (refer to the screenshot). From that point onwards, RealTimeSync will monitor the source folders that were selected during the above steps and copy all new and modified files into the destination folders.
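
To make the monitoring idea more tangible, the following Python sketch detects new and modified files by repeatedly scanning a folder and comparing the results. This is a simple polling stand-in written for illustration, not a description of how RealTimeSync works internally; the names `scan`, `changed_files` and `watch` are made up for this example.

```python
import time
from pathlib import Path
from typing import Callable, Optional


def scan(folder: Path) -> dict[str, tuple[float, int]]:
    """Map each file (relative path) to its modification time and size."""
    state = {}
    for path in folder.rglob("*"):
        if path.is_file():
            info = path.stat()
            state[str(path.relative_to(folder))] = (info.st_mtime, info.st_size)
    return state


def changed_files(before: dict, after: dict) -> list[str]:
    """Return files that are new or whose time stamp or size has changed."""
    return sorted(name for name, sig in after.items() if before.get(name) != sig)


def watch(folder: Path, on_change: Callable[[list[str]], None],
          interval: float = 5.0, max_rounds: Optional[int] = None) -> None:
    """Poll the folder and report changes; runs forever if max_rounds is None."""
    previous = scan(folder)
    rounds = 0
    while max_rounds is None or rounds < max_rounds:
        time.sleep(interval)
        current = scan(folder)
        changed = changed_files(previous, current)
        if changed:
            on_change(changed)  # e.g. trigger the copy job here
        previous = current
        rounds += 1
```

The `on_change` callback is where a copy job would be started. Deleted files are deliberately ignored here, which matches an “Update”-style synchronisation that only adds and refreshes files.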

Software alternatives for sync and back-up

A very effective alternative for back-up stage 1 (real-time protection), albeit one that is somewhat complicated to set up, is the Snapshot script. Originally made by and for the world of Linux, it was introduced in a feature article in our June 2017 issue. It creates complete snapshots of the source directories in a quick and space-saving manner, so that even older file versions are retained.
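
One common way to get complete yet space-saving snapshots is to use hard links for files that have not changed since the previous snapshot. Whether the Snapshot script works exactly like this is an assumption; the Python sketch below merely illustrates the principle, and `make_snapshot` is a made-up name. It expects the snapshot folder to be new and on the same drive as the previous snapshot.

```python
import filecmp
import os
import shutil
from pathlib import Path
from typing import Optional


def make_snapshot(source: Path, snapshot: Path, previous: Optional[Path] = None) -> None:
    """Create a complete snapshot of source in the (new) snapshot folder.

    Files identical to their counterpart in the previous snapshot are
    hard-linked instead of copied, so they take up no additional space.
    """
    for src in source.rglob("*"):
        if not src.is_file():
            continue
        rel = src.relative_to(source)
        dst = snapshot / rel
        dst.parent.mkdir(parents=True, exist_ok=True)
        old = previous / rel if previous is not None else None
        if old is not None and old.is_file() and filecmp.cmp(src, old, shallow=False):
            os.link(old, dst)  # unchanged: second name for the same data
        else:
            shutil.copy2(src, dst)  # new or modified: real copy
```

Every snapshot folder then looks like a full copy, while only changed files consume new space. Older snapshots keep the previous versions of modified files.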

Another way to do this is to use classical backup software like Areca Backup, which is well-suited for back-up stage 2 of our plan. Make sure you select the right version during the installation process (the 32-bit version is not compatible with a 64-bit version of Windows). If necessary, you should also update your Java installation. After the application has been launched, click the “Edit | New destination …” option. Go to the “General” section, choose a name for the backup job and set a “Local folder” as the destination folder (e.g. on the external backup hard drive). Now, go to the “Sources” section and select the source folders containing the data that is to be backed up. Use the “Compression | Compression: None” setting, because compression does little for the files that occupy a lot of storage, such as photos and videos.

Use the “Execute | Simulate back-up” option to find out which files are going to be copied; then you can start the backup operation. This method is best suited for stage-2 back-ups. In any case, you will have to start this kind of backup operation manually after connecting an external drive.

Cloud: ‘Eerily’ Convenient

Many users understandably have reservations about uploading their files to third-party servers, especially if the servers are in the USA or somewhere else in the world where it’s not clear who has or could gain access to them. Furthermore, you can only work with the cloud in a reasonable manner if you have a fast internet connection that offers an upload speed of at least five Mb/s, and there are many locations or countries that don’t have this luxury. On the other hand, it can be quite tempting to place the responsibility for the continued existence of the files on the shoulders of professional computing centres. Cloud storage also holds the alluring prospect of providing access to the files from anywhere and letting you share them with others easily. There are two ways out of this particular dilemma. You could either find (and pay) a service provider who promises to offer a level of data protection that is as wide-ranging as possible, or you could encrypt the files yourself before you upload them to the cloud service. If you choose the latter, you would

Pros & Cons: Cloud storage as an immediate back-up

Although cloud storages are very convenient, they usually leave many questions unanswered when it comes to data protection, so selecting the provider and the data to be uploaded is critical

Professional infrastructure offers the best possible protection against loss of data

Can be expanded almost at will in case of increasing amounts of data

Device-spanning and location-spanning: Security even in case of device losses or house fires

Faith in the provider is required. But scepticism is sometimes advisable, especially when it comes to American providers

Speed & usability depend very strongly upon the available internet speed

Monthly costs; if the price increases in the future, switching providers can often be complicated

Cloud-backup of mobile phone & PC

1 Google Backup & Sync is the PC client software that synchronises any folders and external drives with the Google cloud

2 Just like the other cloud services, Google Drive also offers a smartphone app, so that you will also be able to access the files when you’re on the move

| An overview of cloud memories | Backblaze | Dropbox | Google Drive | Microsoft OneDrive | Strato HiDrive | Tresorit |
|---|---|---|---|---|---|---|
| Website | backblaze.com | dropbox.com | drive.google.com | onedrive.live.com | free-hidrive.com | tresorit.com |
| Server location | USA | USA | USA | USA 2 | Germany | Ireland, Netherlands |
| Capacities (monthly price) | Unlimited (5 USD per PC) | 2 GB (free), 1 TB (9.99 USD), 2 TB (19.99 USD) | 15 GB (free), 100 GB (RM8.49), 1 TB (RM42.99), 10 TB (RM429.99) | 5 GB (free), 50 GB (RM9.49), 1 TB (RM280) 4 5 10 TB (RM380) 4 | 100 GB (2.99 USD), 250 GB (5.90 USD), 1 TB (9.90 USD), 5 TB (39.90 USD) | 200 GB (12.50 USD), 2 TB (30 USD) |
| Sync clients – Win/Android/iOS/Web access | o/1/1/o | o/o/o/o | O/O/O/O | O/O/O/O | O/O/O/O s | O/O/O/O |
| 2-factor login/End-to-end-encrypted | 0/0’ | o/1 | o/1 | 1/1 | 0/0 |

1 A passphrase must be uploaded in order to decrypt/download. 2 For an additional charge for business customers: Office 365. * Back-up for an additional charge * In case of annual billing.

Google’s terms of use grant the company terrifyingly wide-ranging rights of use for uploaded data

If you have a large webspace or your own server, you can use Nextcloud to set up your own cloud solution

Encrypted in the cloud

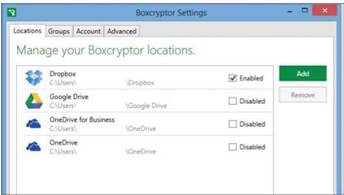

Boxcryptor can upload files to the cloud in a manner that ensures that the provider, intelligence agencies and hackers cannot access them. It does so by encrypting each file before it uploads it.

> How it works: The cloud service provider’s synchronization software should already be set up. During the Boxcryptor installation, you must create a user account and set up a virtual drive (in our case, “X:”). This virtual drive contains subfolders for the cloud services that have been found (the free version of Boxcryptor only supports a single service). When you use the virtual drive to switch over to the folder in question, Boxcryptor asks whether the element in question should be encrypted. This question applies to all the files/folders that are copied there or newly created. If you answer in the affirmative, the tool encrypts the file and copies it to the cloud folder with the extension “.bc”. These files will then no longer be readable in the web interface of the cloud service.

Files that are pushed into the cloud via the virtual Boxcryptor drive are automatically encrypted at the local level beforehand, and they are decrypted when they travel in the other direction

be encrypting the files with the help of a locally-retained key (see ‘Encrypted in the cloud’).

Choosing the right provider

Dropbox invented cloud storage for private users, while Google spread the concept of online storage far and wide by offering it as a free Gmail bonus, and especially by linking it to its online office feature. Just like their competitors, these two players also offer a few gigabytes’ worth of storage for free. However, that’s not enough for heavy-duty back-ups. Furthermore, American providers seem to think poorly of the idea of data protection. This is what Google’s terms of use have to say: ‘When you upload, submit, store, send or receive […], you give Google a worldwide license to […] reproduce, […] modify, […] publish […] and distribute such content.’ This doesn’t just apply to the free entry-level package, but also to paid options that provide more capacity.

In essence, you should only use cloud service providers whose servers are located in the US to store uncritical files (e.g. downloaded software and media or landscape and architecture photos) in an unencrypted manner. When it comes to images containing people and private documents, you should only upload such items to these services within an encrypted Boxcryptor folder (see ‘Encrypted in the cloud’). The Backblaze backup service is somewhat exceptional in this regard, because it does at least offer a type of end-to-end encryption. Even so, you have no choice but to believe the provider’s claim that the encryption and decryption passwords that are to be entered into the web interface for recovery are not stored.

While some providers are quite clean from a legal point of view, others such as Tresorit also set themselves apart from the rest through their technical security features. This applies in particular to the service’s full-fledged end-to-end encryption, which is based on the zero-knowledge principle: the password remains only with the user, so that the provider cannot access the data in any way.

Storage capacities and handy sync software

In our experience, most cloud storage services offer synchronisation software for Windows (some even for Android and iOS). All you have to do is select the folders to be synchronised once. After that, new or modified files are immediately uploaded to the cloud storage. This setup is great for back-up stage 1 (real-time protection) and complements back-up stage 3 (catastrophe hazard insurance), so that you won’t have to put aside full back-ups elsewhere too frequently. However, since even cloud services themselves can break down or become unreachable (e.g. in situations involving long-term internet disruptions), you should never forego a regular stage-2 back-up. The cloud clients also synchronise across multiple devices. For example, a folder can be kept in sync across two or more computers, as well as the cloud. Depending on the size of the online storage, the costs can be hard on the wallet. An offer of one to two terabytes for about RM50 to 80 per month is quite reasonable in terms of price, as long as you don’t project the costs over a period of several years. Backblaze imposes no capacity limits whatsoever and uploads the contents of all internal and external drives of the computer by default (however, you can also choose to back up only individual directories).
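
What the projection over several years looks like can be worked out quickly. The small Python sketch below assumes that the monthly fee stays constant, which is a simplification, and uses a five-year period purely as an example.

```python
def total_cost(monthly_fee_rm: float, years: int) -> float:
    """Total subscription cost in RM, assuming the monthly fee never changes."""
    return monthly_fee_rm * 12 * years


for fee in (50, 80):
    print(f"RM{fee} per month over 5 years: RM{total_cost(fee, 5):,.0f}")
# prints:
# RM50 per month over 5 years: RM3,000
# RM80 per month over 5 years: RM4,800
```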

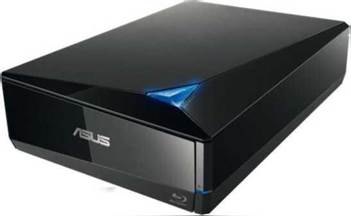

Optical Discs: Can’t Be Erased & Long-Lasting

DVDs and Blu-rays are cumbersome in comparison with hard drives, and even more so when compared with cloud storage. This is due to their limited capacities, as well as the fact that you have to compile each disc individually and burn it in a time-consuming manner. However, they also have advantages. Data that is stored on write-once mediums, which we’re recommending for back-up stage 3, can no longer be modified or deleted. The discs are also small and inexpensive, which makes it easy for you to store them away from your home. Since such back-ups are only made a few times a year, the complicated procedure isn’t that big a deal, and it also provides a good opportunity to sort unimportant things out of the pile of data. Whether you’ll need additional hardware depends upon the amount of data that is to be archived. Thousands of office documents can fit onto a DVD-5 (capacity of 4,480 MB), which can be burned by any computer that has an optical drive. But before you go ahead and burn more than two or three DVDs, do yourself a favour and obtain a Blu-ray burner, which can write mediums with capacities in the range of 25 to 50 GB. Internal models for desktop computers are available from around RM300, while external Blu-ray burners start at around RM450.
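
How many discs an archive needs is simple arithmetic, sketched here in Python. The DVD-5 capacity is the figure quoted above; the Blu-ray values treat 25 and 50 GB as 25,000 and 50,000 MB, which is an approximation chosen for this example.

```python
import math

# Usable capacity per disc in MB. The Blu-ray figures are rounded assumptions.
DISC_CAPACITY_MB = {"DVD-5": 4_480, "BD-25": 25_000, "BD-50": 50_000}


def discs_needed(data_mb: float, disc: str) -> int:
    """Number of discs of the given type required to hold data_mb megabytes."""
    return math.ceil(data_mb / DISC_CAPACITY_MB[disc])
```

For an archive of 100,000 MB, `discs_needed` returns 23 for DVD-5, 4 for BD-25 and 2 for BD-50, which illustrates why a Blu-ray burner pays off beyond a handful of DVDs.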

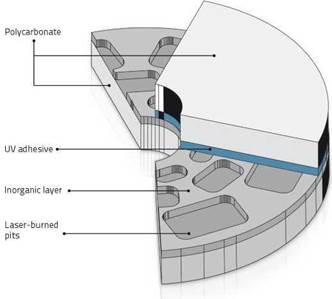

Service life not unlimited

When data is written onto an optical medium, a laser literally burns the bits into a layer. By doing so, the bits are supposed to last forever.

However, with conventional DVDs and Blu-rays, the aforementioned layer is made up of organic material, which degenerates after a few years if the disc is stored under excessively damp and warm conditions. It would therefore make sense to burn all the data onto new blank discs every year, retain two to three generations and dispose of the older ones. Another option is to go for M-Disc DVDs or Blu-rays, which have a significantly longer service life, though both require a compatible disc burner. The advantage of these two types of disc is that their writeable layer is made up of inorganic material, which is supposed to be able to survive for a thousand years (according to the inventors). What’s less clear is whether the polycarbonate carrier material remains optically neutral and undistorted for such a long time. Regardless, since the actual service life only becomes apparent when the medium begins to lose its readability, you shouldn’t let things get that far, even with M-Discs. In other words, always keep multiple copies if you plan to use these kinds of discs.

Organising and burning data

When it comes to burning, it is difficult to accommodate everything without wasting a whole lot of capacity. If you don’t want to stress over what’s going to fit onto which DVD, you could use the 7-Zip compression programme to put all the folders to be burned into a ZIP archive. To do so, simply set “Compression level” to “Store” and use the “Split to volumes, bytes:” option to select the desired media size. Once this has been done, each part file will fit onto a single medium. If you’re storing the mediums with relatives, you could also enable password-protected encryption. As is always the case with encrypted data, you will have to make sure you don’t forget this password under any circumstances, or you might end up securing the data from yourself!
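
7-Zip takes care of the splitting itself, but the principle of fixed-size part files is easy to show. The Python sketch below cuts an existing archive into numbered parts and joins them again; the function names and the “.001” naming pattern are choices made for this example.

```python
import shutil
from pathlib import Path

BLOCK = 1024 * 1024  # copy in 1 MB blocks so large archives need little memory


def split_file(archive: Path, part_size: int) -> list[Path]:
    """Split archive into parts of at most part_size bytes (name.001, name.002, ...)."""
    parts = []
    with archive.open("rb") as src:
        index = 1
        while True:
            part = archive.with_name(f"{archive.name}.{index:03d}")
            written = 0
            with part.open("wb") as out:
                while written < part_size:
                    block = src.read(min(BLOCK, part_size - written))
                    if not block:
                        break
                    out.write(block)
                    written += len(block)
            if written == 0:
                part.unlink()  # nothing left to write: drop the empty part
                break
            parts.append(part)
            index += 1
    return parts


def join_parts(parts: list[Path], target: Path) -> None:
    """Concatenate the part files in order to restore the original archive."""
    with target.open("wb") as out:
        for part in parts:
            with part.open("rb") as src:
                shutil.copyfileobj(src, out)
```

To restore the data, all parts must be present and joined in the right order, so label the discs accordingly.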

Pro & Cons: DVD/Blu-ray as a permanent archive

The inflexibility of optical data media is both an advantage and a disadvantage, mainly because data can no longer be deleted or manipulated once it has been burned onto a DVD or Blu-ray

Back-ups normally cannot be deleted or manipulated

Relatively long service life if stored properly

Relatively low costs per medium; easy to store, so optimal for back-ups that are to be stored away from home

Backups and recoveries are quite cumbersome and time-consuming

Inflexible: Relatively small and unchangeable media sizes of 4.4 or 25 GB

Slow writing and reading speeds

If you buy an optical drive these days, it should be a Blu-ray burner with M-Disc support, which is available as an internal or external USB model

M-Disc: The data should become as old as the hills

Normal mediums store data in an organic layer. When an M-Disc is written, the laser burns holes into an inorganic layer, whose composition is similar to that of stone